Embedding & the X-Frame-Options Response Header

During a recent project, I came face to face with both the power and the peril of embeddable content. The premise of that project, Placemat, was to remove the friction people encounter when sending content-rich links (videos, music, articles, etc.) to one another. Links are usually shared through email, text messaging or a social network. However, none of these channels lets a user engage with all the content sent to them in a single place. Users also cannot save these links to a personal library, or share what they have learned, short of sending another email or text and continuing the cycle. Done right, embedding can condense the vastness of the web into concise bits of knowledge. But letting users embed anything and everything raises some serious hurdles.

Soon after we began development, my collaborators and I ran into a ‘Same Origin’ error when trying to generate an iframe from an ordinary YouTube URL. After the same thing happened with a handful of other sites, I turned to Stack Overflow and realized that this is a common problem.

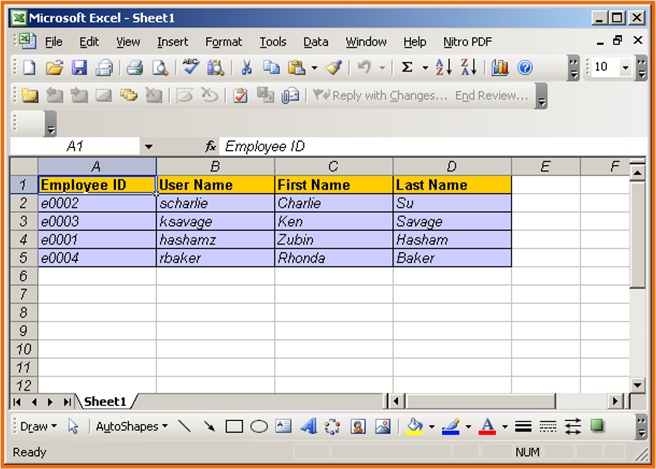

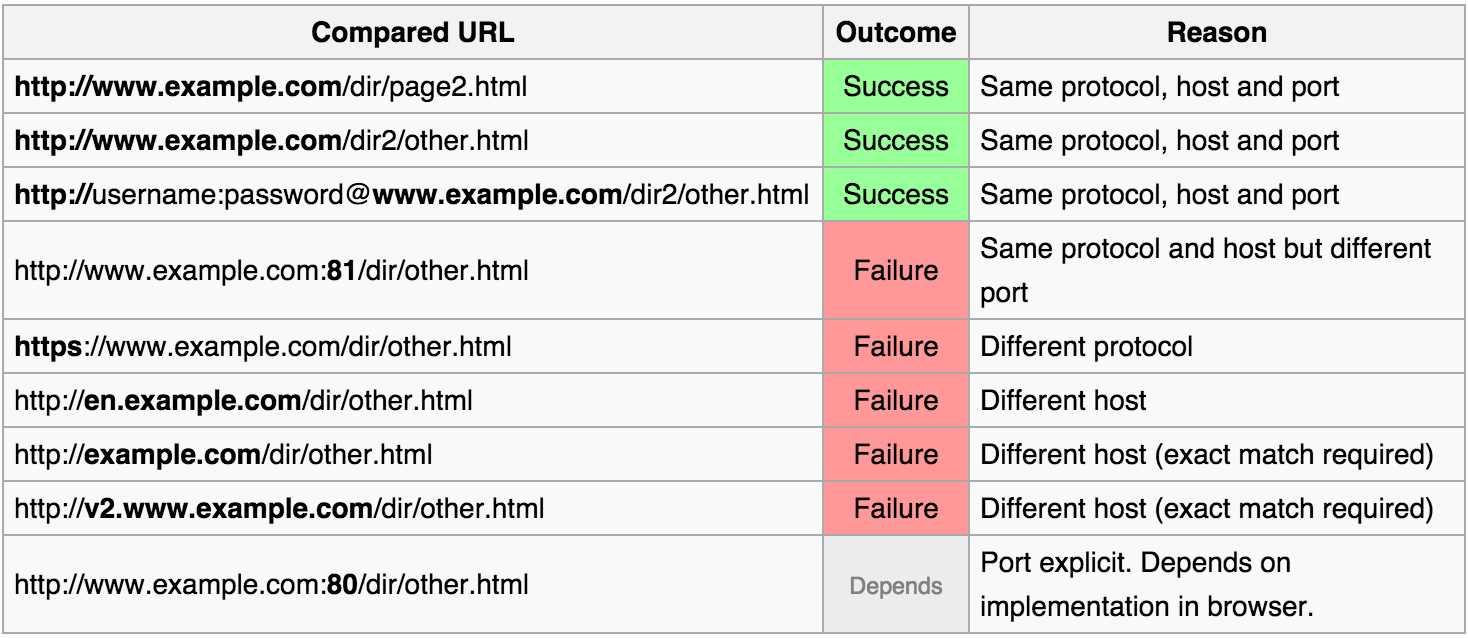

The “same-origin” security policy dictates that a web browser may let scripts in one web page access data in a second web page only if both pages have the same origin. This means the second page must match the first in protocol, host and port. This table from Wikipedia gives a good breakdown of how this works in practice.
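To make the origin rule concrete, here is a minimal Python sketch of the comparison. It assumes only http and https URLs, fills in their default ports (80 and 443) when none is given, and uses made-up example.com addresses; real browsers handle further cases (file: URLs, sandboxed frames and so on) that this ignores.

```python
from __future__ import annotations

from urllib.parse import urlsplit

# Default ports for the schemes handled here: "https://host:443/" and
# "https://host/" name the same origin.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple[str, str, int | None]:
    """Return the (protocol, host, port) triple that defines a URL's origin."""
    parts = urlsplit(url)  # urlsplit lowercases the scheme and the hostname
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(parts.scheme)
    return parts.scheme, parts.hostname or "", port


def same_origin(a: str, b: str) -> bool:
    """Two pages share an origin only if protocol, host and port all match."""
    return origin(a) == origin(b)


page = "http://www.example.com/dir/page.html"
print(same_origin(page, "http://www.example.com/dir2/other.html"))  # True: only the path differs
print(same_origin(page, "https://www.example.com/dir/page.html"))   # False: different protocol
print(same_origin(page, "http://en.example.com/dir/page.html"))     # False: different host
print(same_origin(page, "http://www.example.com:81/dir/page.html")) # False: different port
```

Note that the path never enters the comparison, which is why two pages on the same site can freely frame and script each other.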

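‘SAMEORIGIN’ is one of three possible X-Frame-Options values. A site includes the X-Frame-Options header in its HTTP response to decide whether its pages may be embedded in a “frame”, “iframe” or “object”. ‘SAMEORIGIN’ allows a page to be framed only by pages on its own origin, ‘DENY’ prevents display in a frame altogether, and ‘ALLOW-FROM uri’ means the page can only be framed by the specified origin. (‘ALLOW-FROM’ is now obsolete: modern browsers ignore it, and the Content-Security-Policy frame-ancestors directive has taken its place.)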
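The following is a simplified Python model of how a browser applies the header when one page tries to frame another. It is an illustrative sketch, not browser code: `frame_allowed` is a hypothetical name, it checks only the direct parent page, and it models ‘ALLOW-FROM’ as originally specified even though current browsers ignore that value. The placemat.example address is made up.

```python
from __future__ import annotations

from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple[str, str, int | None]:
    """(protocol, host, port), with default ports filled in."""
    parts = urlsplit(url)
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(parts.scheme)
    return parts.scheme, parts.hostname or "", port


def frame_allowed(headers: dict[str, str], framed_url: str, parent_url: str) -> bool:
    """Decide whether framed_url may be shown in a frame on parent_url.

    headers are the HTTP response headers of the page being framed.
    """
    # Header names are case-insensitive, so normalise them before lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    value = lowered.get("x-frame-options")
    if value is None:
        return True  # no header: this check does not block framing
    directive, _, argument = value.strip().partition(" ")
    directive = directive.upper()
    if directive == "DENY":
        return False
    if directive == "SAMEORIGIN":
        return origin(framed_url) == origin(parent_url)
    if directive == "ALLOW-FROM":
        # Historical behaviour only; modern browsers ignore this value.
        return origin(argument.strip()) == origin(parent_url)
    return True  # unrecognised values are ignored


# A regular YouTube watch page sends SAMEORIGIN, so another site cannot frame it.
print(frame_allowed({"X-Frame-Options": "SAMEORIGIN"},
                    "https://www.youtube.com/watch?v=VIDEO_ID",
                    "https://placemat.example/"))  # False
```

In a real browser the refusal shows up as a console error rather than a return value, which is exactly the kind of message we hit.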

Many popular sites offer embeddable versions of their content for developers to use on their own sites. The following two links reference the same video.

The embeddable version lacks many of the features users are accustomed to: there is no like button or comment section, just a bare-bones player. One motivation for sites like YouTube to format their embeddable content this way is to prevent clickjacking, which Wikipedia describes as “a malicious technique of tricking a Web user into clicking on something different from what the user perceives they are clicking on.” For example, if a regular YouTube page could be embedded on another site, that site could layer an invisible frame of the YouTube page over an innocent-looking button, so that its visitors are “clickjacked” into clicking a like button or leaving a comment. Sending the ‘SAMEORIGIN’ header on its regular pages is one of the defenses YouTube employs against this, helping to maintain the integrity of its community’s viewing metrics.

While many popular sites offer embeddable iframes for their content, there is no standard format for them. Since Placemat had to take any URL entered by a user and generate embeddable content, this was a real challenge. It was not feasible to write custom logic for every major content site. Even if we had had the time, some sites, such as Vevo, prevent their content from being embedded anywhere except on a few whitelisted partners. Thankfully, we found a solution in Embedly.
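To give a sense of what per-site custom logic would involve, here is a hypothetical Python rule for YouTube alone, the approach we decided against. It assumes the common `youtube.com/watch?v=VIDEO_ID` and `youtu.be/VIDEO_ID` link shapes and YouTube’s `/embed/` URL pattern; the function and list names are made up for illustration.

```python
from __future__ import annotations

from urllib.parse import parse_qs, urlsplit

YOUTUBE_HOSTS = {"www.youtube.com", "youtube.com", "m.youtube.com"}


def youtube_embed_url(url: str) -> str | None:
    """Hypothetical rule: map a YouTube link to its embeddable /embed/ URL."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # urlsplit lowercases the hostname
    video_id = None
    if host in YOUTUBE_HOSTS and parts.path == "/watch":
        # e.g. https://www.youtube.com/watch?v=VIDEO_ID
        video_id = parse_qs(parts.query).get("v", [None])[0]
    elif host == "youtu.be":
        # e.g. https://youtu.be/VIDEO_ID
        video_id = parts.path.lstrip("/").split("/")[0] or None
    if not video_id:
        return None
    return f"https://www.youtube.com/embed/{video_id}"


# Every further site would need its own rule in this list, and sites that
# only allow whitelisted partners to embed cannot be handled by a rule at all.
RULES = [youtube_embed_url]


def embed_url(url: str) -> str | None:
    """Return the first embed URL any rule produces, or None."""
    for rule in RULES:
        result = rule(url)
        if result is not None:
            return result
    return None


print(embed_url("https://www.youtube.com/watch?v=VIDEO_ID"))
# https://www.youtube.com/embed/VIDEO_ID
```

Multiply this by every content site users might paste and the maintenance cost becomes clear.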

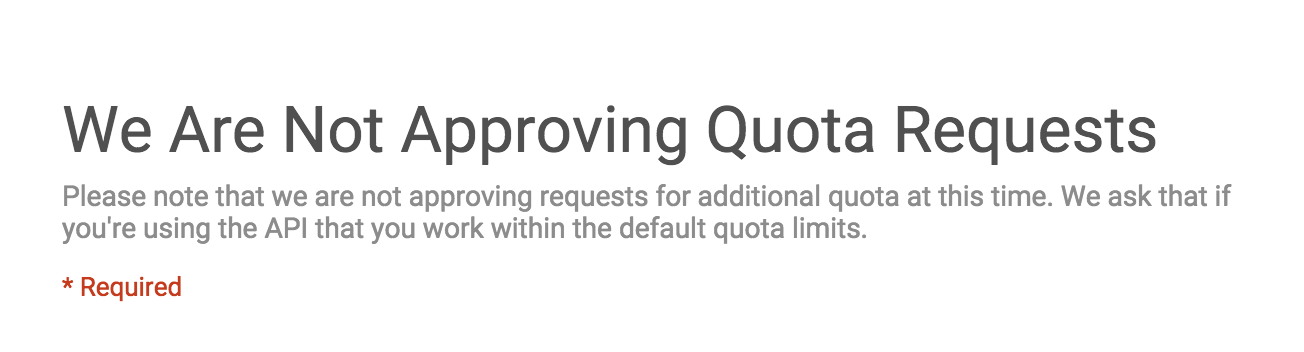

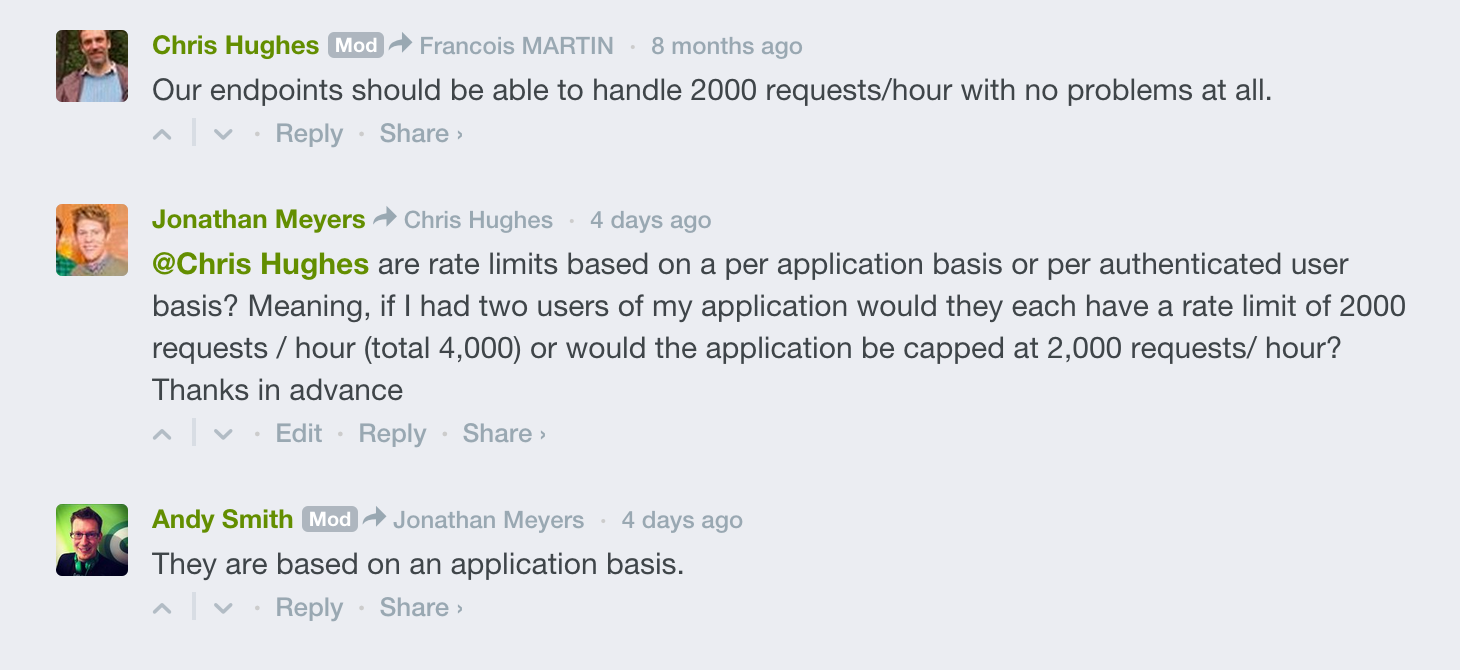

Embedly turns any link into an embeddable ‘card’. It has over 250 content partners, which meant the majority of our users’ content would be formatted in a way optimized for its source. Embedly is also a whitelisted partner of many sites whose content would otherwise be unembeddable. Embedly does rate-limit its API; however, there is no rate limit on converting links to cards with its JavaScript library. It works by simply adding a class of “embedly-card” to any tag and including Embedly’s JavaScript file on the page (see the example further down this page).
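Here is a minimal Python sketch that generates this markup for a list of saved links, the way a personal library page might. The helper names are hypothetical, and the script URL is an assumption based on the snippet Embedly published; check Embedly’s current documentation before relying on it.

```python
from __future__ import annotations

from html import escape

# Assumption: Embedly's platform script as published in its embed snippet.
EMBEDLY_SCRIPT = '<script async src="//cdn.embedly.com/widgets/platform.js" charset="UTF-8"></script>'


def embedly_card(url: str, title: str | None = None) -> str:
    """Return an <a> tag with the embedly-card class for Embedly's script to pick up."""
    href = escape(url, quote=True)  # escape &, <, >, and quotes inside the attribute
    text = escape(title if title is not None else url)
    return f'<a class="embedly-card" href="{href}">{text}</a>'


def render_library(urls: list[str]) -> str:
    """Hypothetical helper: one card per saved link, with the script included once."""
    cards = "\n".join(embedly_card(u) for u in urls)
    return f"{cards}\n{EMBEDLY_SCRIPT}"


print(embedly_card("https://www.youtube.com/watch?v=VIDEO_ID"))
```

Because the cards are built in the visitor’s browser by Embedly’s script, the page itself only ships plain anchor tags, which is why this route avoids the API rate limit.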

Looking forward…

The rise of user-generated content has flooded the web with more things than ever to watch, listen to and read. While only a few popular platforms host this content, there is an endless number of tastemakers embedding it on their own sites across the web. These tastemakers give a sense of direction and context to an otherwise chaotic content ecosystem. Embedding will be a critical part of the web going forward, and the tools that make it possible are still very much in their infancy.

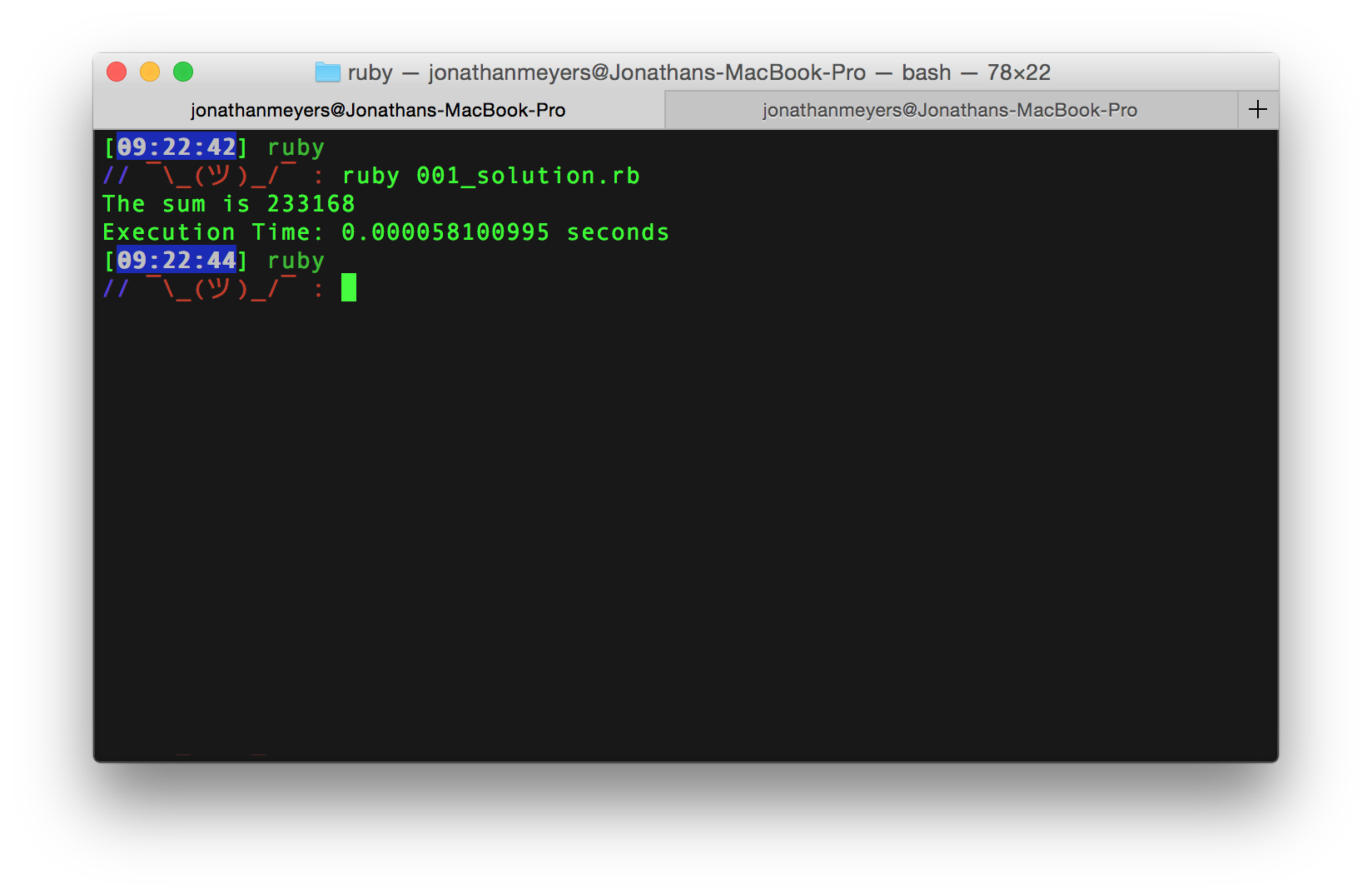

…not quite.

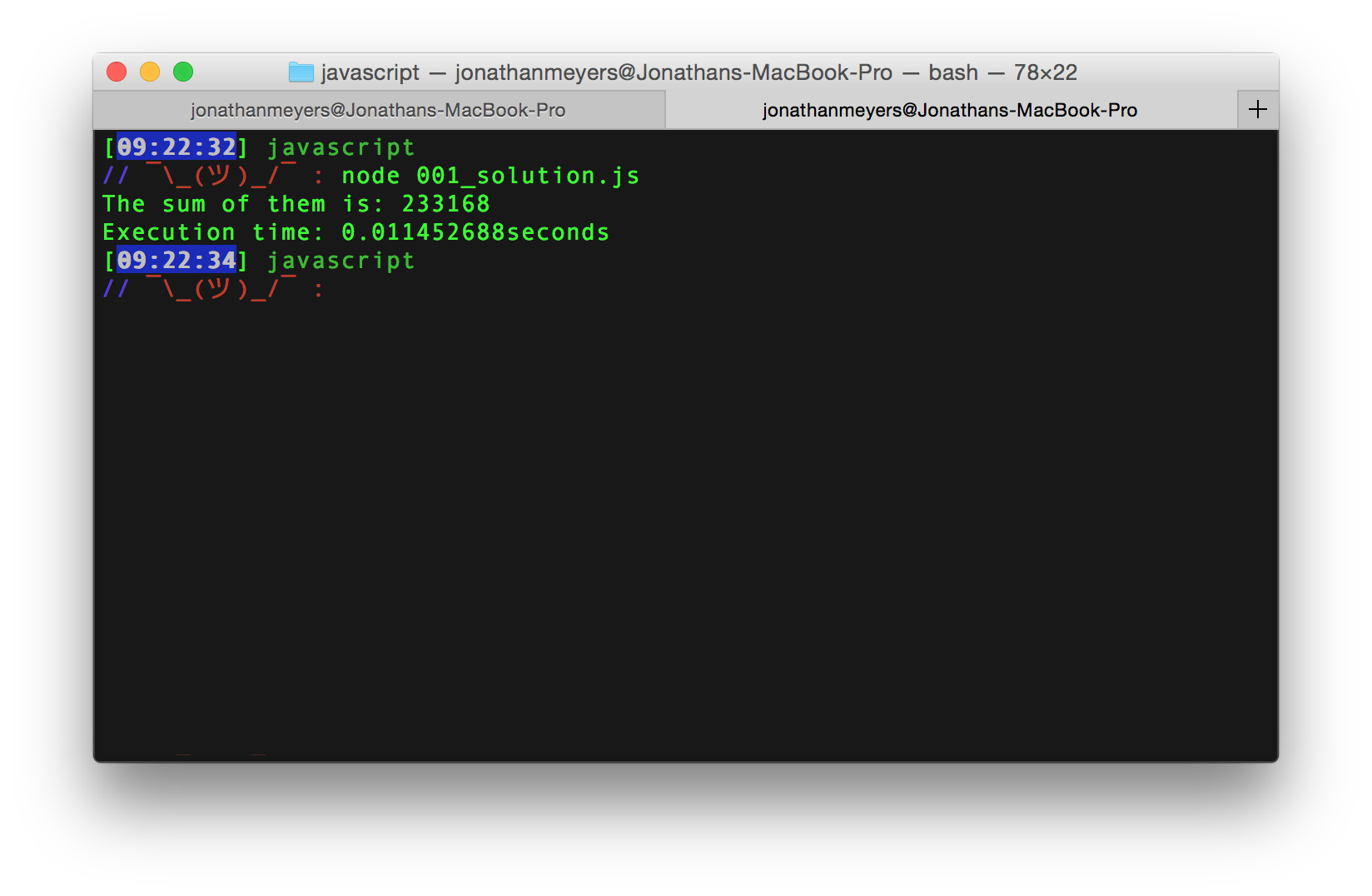

…not quite.